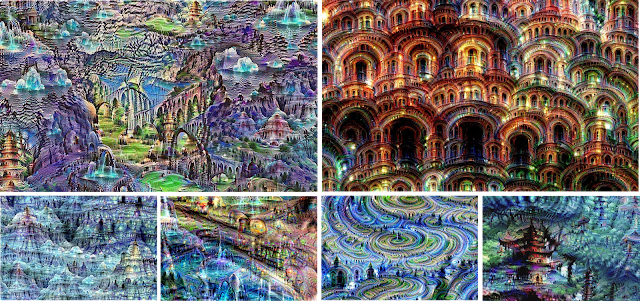

If we see with our minds, not our eyes – so do computers…

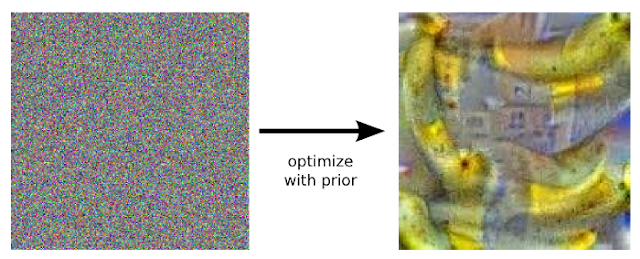

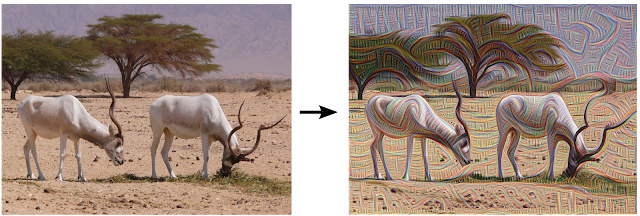

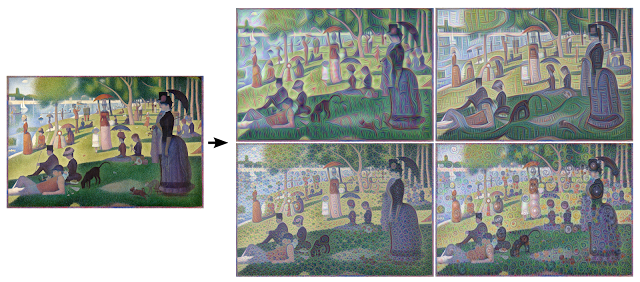

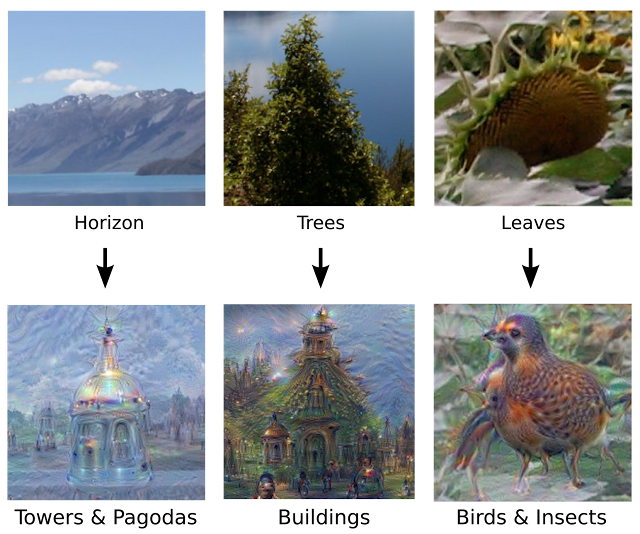

In a post on the Google Research blog, researchers showed how neural networks trained to classify images interpret and re-interpret image abstractions. Some examples are below.

Not a bad comparison from a friend, who immediately linked these images (especially the bottom examples) to this image search:

Left: Original photo by Zachi Evenor. Right: processed by Günther Noack, Software Engineer

Left: Original painting by Georges Seurat. Right: processed images by Matthew McNaughton, Software Engineer

The original image influences what kind of objects form in the processed image.

Neural net “dreams”, generated purely from random noise using a network trained on places by MIT Computer Science and AI Laboratory. See our Inceptionism gallery for hi-res versions of the images above and more (images marked “Places205-GoogLeNet” were made using this network).
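Roughly, the trick behind these images is to run a picture through a trained network and then nudge the *pixels* by gradient ascent, so that whatever a chosen layer already responds to gets amplified ("whatever you see there, give me more of it"). The toy sketch below shows only that core loop. It is an assumption-heavy illustration: a single hand-written ReLU layer stands in for a real image network such as GoogLeNet, the "image" is two numbers, and the weights `W` and start point `x0` are hypothetical. The step is divided by the mean absolute gradient, a common normalization choice in public DeepDream-style code, so the step size stays stable.

```python
# Toy sketch of "dreaming": gradient ascent on the INPUT so that a layer's
# activations grow. One hand-written ReLU layer stands in for a trained
# image network (an assumption; real systems use a deep CNN).

def layer(W, x):
    """Return ReLU(W @ x) for a weight matrix W (list of rows) and input x."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W]


def objective(W, x):
    """Half the squared L2 norm of the layer's activations ("more of what you see")."""
    return 0.5 * sum(a * a for a in layer(W, x))


def gradient(W, x):
    """d objective / dx = W^T h, since h is already zero wherever the ReLU is off."""
    h = layer(W, x)
    return [sum(W[i][j] * h[i] for i in range(len(W))) for j in range(len(x))]


def dream(W, x, steps=20, step_size=0.1):
    """Repeatedly nudge x uphill; each step is scaled by the mean |gradient|."""
    x = list(x)
    for _ in range(steps):
        g = gradient(W, x)
        scale = sum(abs(v) for v in g) / len(g) or 1.0  # avoid dividing by zero
        x = [xi + step_size * gi / scale for xi, gi in zip(x, g)]
    return x


W = [[1.0, 0.5], [-0.5, 1.0]]  # hypothetical "trained" weights
x0 = [0.2, 0.1]                 # hypothetical starting "image" (two pixels)
x1 = dream(W, x0)
print(objective(W, x0), "->", objective(W, x1))
```

Because the objective here is convex and smooth, every ascent step can only increase it; a real network is far less well-behaved, which is part of why its "dreams" look so strange.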

“A New Theory of Distraction” by Joshua Rothman (New Yorker)

“…Like typing, Googling, and driving, distraction is now a universal competency. We’re all experts.

Still, for all our expertise, distraction retains an aura of mystery. It’s hard to define: it can be internal or external, habitual or surprising, annoying or pleasurable. It’s shaped by power: where a boss sees a distracted employee, an employee sees a controlling boss. Often, it can be useful: my dentist, who used to be a ski instructor, reports that novice skiers learn better if their teachers, by talking, distract them from the fact that they are sliding down a mountain. (…)

Another source of confusion is distraction’s apparent growth. There are two big theories about why it’s on the rise. The first is material: it holds that our urbanized, high-tech society is designed to distract us. (…) The second big theory is spiritual—it’s that we’re distracted because our souls are troubled. (…). It’s not a competition, though; in fact, these two problems could be reinforcing each other. Stimulation could lead to ennui, and vice versa.

A version of that mutual-reinforcement theory is more or less what Matthew Crawford proposes in his new book, “The World Beyond Your Head: Becoming an Individual in an Age of Distraction” (Farrar, Straus & Giroux). (…) Ever since the Enlightenment, he writes, Western societies have been obsessed with autonomy, and in the past few hundred years we have put autonomy at the center of our lives, economically, politically, and technologically; often, when we think about what it means to be happy, we think of freedom from our circumstances. Unfortunately, we’ve taken things too far: we’re now addicted to liberation, and we regard any situation—a movie, a conversation, a one-block walk down a city street—as a kind of prison. Distraction is a way of asserting control; it’s autonomy run amok. Technologies of escape, like the smartphone, tap into our habits of secession.

The way we talk about distraction has always been a little self-serving—we say, in the passive voice, that we’re “distracted by” the Internet or our cats, and this makes us seem like the victims of our own decisions. But Crawford shows that this way of talking mischaracterizes the whole phenomenon…” read full story

Injectable nanowire mesh to stimulate and study neuron activity

“Syringe-injectable electronics”, a paper published in Nature Nanotechnology, describes a new technique that allows scientists to introduce a nanowire mesh into the brain.

The structure could not only detect, transmit and record neural activity, but could eventually also stimulate neurons with a precision beyond what current techniques allow. Its low invasiveness and low rate of rejection open the possibility of running such studies in normal, functioning subjects during everyday activities.

“Teaching Machines to Read and Comprehend”

As reported by MIT Technology Review (“Google DeepMind Teaches Artificial Intelligence Machines to Read”) and pointed out by an attentive and well-informed friend, researchers from the University of Oxford and Google DeepMind (Karl Moritz Hermann, Tomas Kocisky, Edward Grefenstette, Lasse Espeholt, Will Kay, Mustafa Suleyman and Phil Blunsom) published a paper showing progress in machine learning techniques for computer reading comprehension.

The researchers used different models as reading agents, including deep neural networks and symbolic matching approaches. After reading a document, an agent had to predict which entity was missing from one of the article's summary points.

For supervised learning, they used data that was already annotated: news articles from CNN online, the Daily Mail website and the MailOnline news feed, each accompanied by human-written bullet-point summaries.
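To make the data idea concrete: each summary point can be turned into a fill-in-the-blank (cloze) question by removing one entity, and entity names are replaced by neutral ids so a model has to read the article rather than rely on world knowledge. The sketch below is a simplified, hypothetical version of that step. The helper names, the example sentences and the `@entity`/`@placeholder` markers are illustrative, the entity list is supplied by hand (a real pipeline would detect entities automatically), and ids are not shuffled per example as a production dataset might do.

```python
# Sketch: turn one news summary point into a cloze-style question.
# Entity detection is assumed to be done already (entities passed in by hand).

def anonymize(text, entity_ids):
    """Replace each entity name with its id, longest names first."""
    for name in sorted(entity_ids, key=len, reverse=True):
        text = text.replace(name, entity_ids[name])
    return text


def make_cloze(document, summary_point, entities, answer):
    """Return (context, query, answer_id) for one summary point."""
    entity_ids = {name: f"@entity{i}" for i, name in enumerate(entities)}
    context = anonymize(document, entity_ids)
    # Blank out the answer entity first, then anonymize the remaining ones.
    query = anonymize(summary_point.replace(answer, "@placeholder"), entity_ids)
    return context, query, entity_ids[answer]


doc = "Oxford researchers worked with DeepMind on machine reading."
point = "DeepMind and Oxford teach machines to read"
context, query, answer_id = make_cloze(doc, point, ["Oxford", "DeepMind"], "DeepMind")
print(context)
print(query)
print(answer_id)
```

Because summaries already exist for every article, questions like this can be generated at scale without hiring annotators, which is the bottleneck the abstract refers to.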

Here’s the abstract as published:

“Teaching machines to read natural language documents remains an elusive challenge. Machine reading systems can be tested on their ability to answer questions posed on the contents of documents that they have seen, but until now large scale training and test datasets have been missing for this type of evaluation. In this work we define a new methodology that resolves this bottleneck and provides large scale supervised reading comprehension data. This allows us to develop a class of attention based deep neural networks that learn to read real documents and answer complex questions with minimal prior knowledge of language structure.”
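The "attention" in the abstract means, roughly, that the network scores every word of the document against the question, turns those scores into weights that sum to one, and reads out a weighted average that focuses on the relevant passage. Below is a minimal sketch of that soft-attention step only. The two-dimensional vectors are hypothetical stand-ins for learned word and question encodings, and the plain dot-product score is a swapped-in simplification: the paper's models learn their own scoring function on top of recurrent (LSTM) encodings.

```python
import math

# Minimal soft attention: score each token against the question, turn the
# scores into weights with a softmax, and read out a weighted average.
# Dot-product scoring is an assumption, not the paper's learned scorer.

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, tokens):
    """Return (weights, summary) for a query vector and a list of token vectors."""
    scores = [sum(q * t for q, t in zip(query, tok)) for tok in tokens]
    weights = softmax(scores)
    dim = len(tokens[0])
    summary = [sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)]
    return weights, summary


question = [1.0, 0.0]                              # hypothetical question encoding
doc_tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical token encodings
weights, summary = attend(question, doc_tokens)
print([round(w, 3) for w in weights], summary)
```

The token most aligned with the question gets the largest weight, so the summary vector leans toward it; in a trained reader, that summary is what the model uses to pick its answer.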

“Apple versus Google” by Om Malik (New Yorker)

“In the past two weeks, executives from both Apple and Google have taken the stage at the Moscone Center, in San Francisco, to pump up their troops, their developer corps, and the media. What struck me first in this technology lollapalooza was how similar the events were. (…)

Sure, Google’s and Apple’s ecosystems look a little different, but they are meant to do pretty much the same thing. For the two companies, innovation on mobile essentially means catching up to the other’s growing list of features. (…). Even their products sound remarkably the same. Apple Pay. Android Pay. Apple Photos. Google Photos. Apple Wallet. Google Wallet. Google announced its “Internet of things” efforts, such as Weave and Brillo. Apple came back with HomeKit. Android in the car. Apple in the car. At the Google I/O conference, Google announced app-focussed search and an invisible interface that allows us to get vital information without opening an application. There was an improved Google Now (a.k.a. Now On Tap). Apple announced a new, improved Siri. It also announced Proactive Assistant. Google launched Photos, which can magically sort, categorize, and even search thousands of photos using voice commands. Apple improved its Photos app—you can search them using Siri.

These two companies are very much alike—the Glimmer Twins of our appy times. And, like any set of twins, if you look closely you start to see the differences.” read full article
