“Your toaster will soon talk to your toothbrush and your bathroom scale. They will all have a direct line to your car and to the health sensors in your smartphone. I have no idea what they will think of us or what they will gossip about, but our devices will soon be sharing information about us — with each other and with the companies that make or support them.”… read more

Category Archives: Readings

“Growing Pains for Deep Learning” By Chris Edwards

“Advances in theory and computer hardware have allowed neural networks to become a core part of online services such as Microsoft’s Bing, driving their image-search and speech-recognition systems. The companies offering such capabilities are looking to the technology to drive more advanced services in the future, as they scale up the neural networks to deal with more sophisticated problems.

It has taken time for neural networks, initially conceived 50 years ago, to become accepted parts of information technology applications. After a flurry of interest in the 1990s, supported in part by the development of highly specialized integrated circuits designed to overcome their poor performance on conventional computers, neural networks were outperformed by other algorithms, such as support vector machines in image processing and Gaussian models in speech recognition.” read more

Can we reason physics without causality?

In this article published in Aeon, Mathias Frisch discusses the role of causality in shaping our knowledge.

“…In short, a working knowledge of the way in which causes and effects relate to one another seems indispensable to our ability to make our way in the world. Yet there is a long and venerable tradition in philosophy, dating back at least to David Hume in the 18th century, that finds the notions of causality to be dubious. And that might be putting it kindly.

Hume argued that when we seek causal relations, we can never discover the real power; the, as it were, metaphysical glue that binds events together. All we are able to see are regularities – the ‘constant conjunction’ of certain sorts of observation. …Which is not to say that he was ignorant of the central importance of causal reasoning… Causal reasoning was somehow both indispensable and illegitimate. We appear to have a dilemma.

… causes seemed too vague for a mathematically precise science. If you can’t observe them, how can you measure them? If you can’t measure them, how can you put them in your equations? Second, causality has a definite direction in time: causes have to happen before their effects. Yet the basic laws of physics (as distinct from such higher-level statistical generalisations as the laws of thermodynamics) appear to be time-symmetric…

…

Neo-Russellians in the 21st century express their rejection of causes …

This is all very puzzling. Is it OK to think in terms of causes or not? If so, why, given the apparent hostility to causes in the underlying laws? And if not, why does it seem to work so well?” read more

“Future A.I. Will Be Able to Generate Photos We Need Out of Nothing” by Paul Melcher

“…Photo licensing companies like Shutterstock or Getty Images could let go of their tens of thousands of contributors and tens of millions of stored images and replace them with a smart bot that can instantly create the exact image you are looking for.

Think this idea is crazy? Think again. Already text algorithms create entire human readable articles from raw data they gather around a sporting event or a company’s stock. Automatically. Chances are, you’ve already read a computer generated article without even knowing it.

CGI is now so advanced that entire movies are filmed in front of a green backdrop before being completed with the necessary reality elements we would swear are true. Skilled Photoshop artists can already merge different photos to create a new one, fabricating a true-to-life scene that never existed. IKEA revealed last year that 75% of their catalog photos are not real, but are instead entirely computer generated.” read article at petapixel

Experiments shed light on near-sleep brain dynamics

“Co-activated yet disconnected—Neural correlates of eye closures when trying to stay awake” by

Ju Lynn Ong, Danyang Kong, Tiffany T.Y. Chia, Jesisca Tandi, B.T. Thomas Yeo, and Michael W.L. Chee. Published in NeuroImage, the paper studies brain activity in sleep-deprived participants.

Of course it’s no news that when we are sleep-deprived and approaching sleep, with spontaneous eye closures, our brain is somewhat less connected and aware. But this borderline state makes data hard to collect, and this paper brings new scientific data to the table.

“Improved Semantic Representations From Tree-Structured Long Short-Term Memory Networks”

by Kai Sheng Tai, Richard Socher, Christopher D. Manning.

The paper introduces a generalization of Long Short-Term Memory networks to tree-structured network topologies. The authors state that “Tree-LSTMs outperform all existing systems and strong LSTM baselines on two tasks: predicting the semantic relatedness of two sentences (SemEval 2014, Task 1) and sentiment classification (Stanford Sentiment Treebank)”.

A companion set of code is also available.
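To make the idea of a tree-structured LSTM more concrete, here is a minimal sketch of a "child-sum" style cell in plain Python. It is not the authors' companion code: the class name, the tuple-based tree format, the tiny dimensions and the random, untrained weights are all assumptions made for illustration, and the gate equations follow my reading of the paper's child-sum variant.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class ChildSumTreeLSTM:
    """Illustrative tree-structured LSTM cell (hypothetical names, untrained weights)."""

    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_dim = hidden_dim
        # One (W, U, b) triple per gate: input, forget, output, candidate update.
        self.params = {
            gate: (mat(hidden_dim, input_dim), mat(hidden_dim, hidden_dim), [0.0] * hidden_dim)
            for gate in ("i", "f", "o", "u")
        }

    def _pre(self, gate, x, h):
        """Pre-activation W x + U h + b for the given gate."""
        W, U, b = self.params[gate]
        return [a + c + d for a, c, d in zip(matvec(W, x), matvec(U, h), b)]

    def node(self, x, children):
        """Combine input x with a list of child (h, c) states."""
        d = self.hidden_dim
        # Sum of the children's hidden states (all zeros for a leaf).
        h_sum = [sum(h[k] for h, _ in children) for k in range(d)]
        i = [sigmoid(z) for z in self._pre("i", x, h_sum)]
        o = [sigmoid(z) for z in self._pre("o", x, h_sum)]
        u = [math.tanh(z) for z in self._pre("u", x, h_sum)]
        c = [i[k] * u[k] for k in range(d)]
        # Each child gets its own forget gate, computed from that child's hidden state.
        for h_k, c_k in children:
            f = [sigmoid(z) for z in self._pre("f", x, h_k)]
            for k in range(d):
                c[k] += f[k] * c_k[k]
        h = [o[k] * math.tanh(c[k]) for k in range(d)]
        return h, c

    def encode(self, tree):
        """Encode a tree given as (input_vector, [subtrees]) from the leaves up."""
        x, subtrees = tree
        return self.node(x, [self.encode(t) for t in subtrees])


# Toy tree: a root with two leaf children, each carrying a 2-dimensional input.
leaf_a = ([1.0, 0.0], [])
leaf_b = ([0.0, 1.0], [])
root = ([0.5, 0.5], [leaf_a, leaf_b])

model = ChildSumTreeLSTM(input_dim=2, hidden_dim=3)
h_root, c_root = model.encode(root)
```

When every node has exactly one child, the recursion walks a chain, which is how the tree version generalizes the usual sequential LSTM.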

-Machine: i ’m not ashamed of being a philosopher!

Google Brain researchers Oriol Vinyals and Quoc V. Le published “A Neural Conversational Model”. They describe their experiment in having an artificial intelligence learn to simulate human conversation.

A good short read, if only for examples such as:

Human: what year were you born ?

Machine: 1977 .

…

Human: why are we here ?

Machine: i ’m not sure .

or

Human: what is the purpose of living ?

Machine: to live forever .

or

Human: give me some examples of moral actions…

Machine: i ’m not a moralist .

…

Human: be moral !

Machine: be a man !

Scientific Paper Corrections Stable, Retractions on the Rise

“Why Growing Retractions Are (Mostly) a Good Sign”, by Daniele Fanelli, examines statistics on retractions and corrections of scientific papers in recent history.

The paper tells us that the growing incidence of retractions is probably due to better detection of fraud and better general content checking. That is good news, to be celebrated and promoted.

“Welcome to the Dawn of the Age of Robots” by VIVEK WADHWA

Article from singularityhub.com on the DARPA Robotics Challenge.

“Can We Design Trust Between Humans and Artificial Intelligence?” by Patrick Mankins

Designer Patrick Mankins’s article on building trust between people and artificial intelligence.

Excerpts below, but read the full article – it’s not long anyway:

“Machine learning and cognitive systems are now a major part many products people interact with every da…. The role of designers is to figure out how to build collaborative relationships between people and machines that help smart systems enhance human creativity and agency rather than simply replacing them.

… before self-driving cars can really take off, people will probably have to trust their cars to make complex, sometimes moral, decisions on their behalf, much like when another person is driving.

Creating a feedback loop

This also takes advantage of one of the key distinguishing capabilities of many AI systems: they know when they don’t understand something. Once a system gains this sort of self-awareness, a fundamentally different kind interaction is possible.

Building trust and collaboration

What is it that makes getting on a plane or a bus driven by a complete stranger something people don’t even think twice about, while the idea of getting into a driverless vehicle causes anxiety? … We understand why people behave the way they do on an intuitive level, and feel like we can predict how they will behave. We don’t have this empathy for current smart systems.”

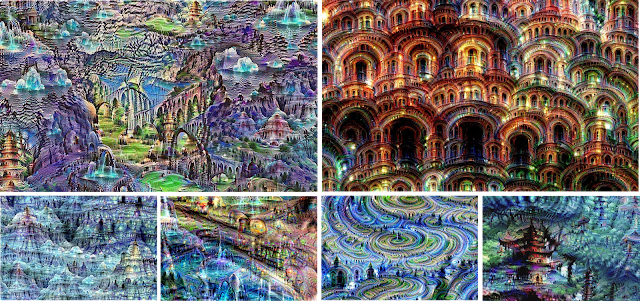

If we see with our minds, not our eyes – so do computers…

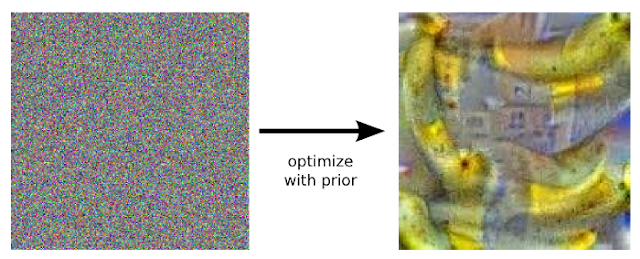

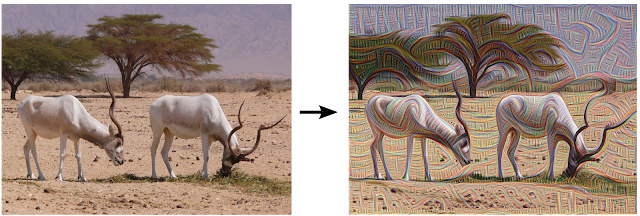

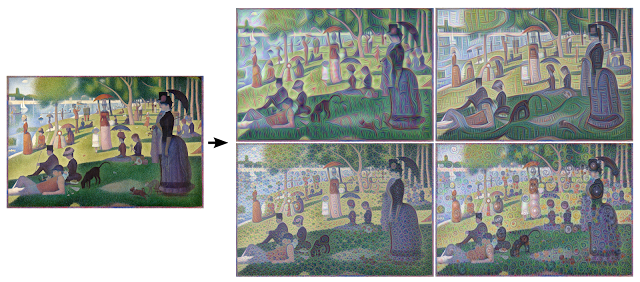

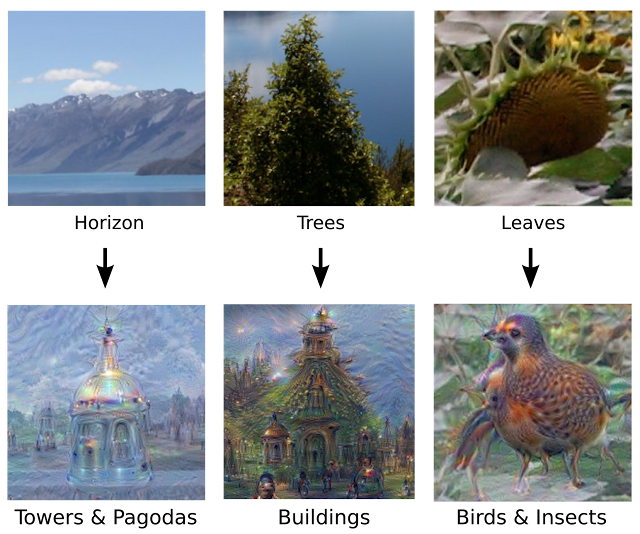

In a post on the Google Research blog, researchers show how neural networks trained to classify images interpret and re-interpret image abstractions, as in the examples below.

Not a bad idea from a friend, who immediately associated it (especially the bottom examples) with this image search:

Left: Original photo by Zachi Evenor. Right: processed by Günther Noack, Software Engineer.

Left: Original painting by Georges Seurat. Right: processed images by Matthew McNaughton, Software Engineer.

The original image influences what kind of objects form in the processed image.

Neural net “dreams”— generated purely from random noise, using a network trained on places by MIT Computer Science and AI Laboratory. See our Inceptionism gallery for hi-res versions of the images above and more (Images marked “Places205-GoogLeNet” were made using this network).

“A New Theory of Distraction” BY JOSHUA ROTHMAN (New Yorker)

“…Like typing, Googling, and driving, distraction is now a universal competency. We’re all experts.

Still, for all our expertise, distraction retains an aura of mystery. It’s hard to define: it can be internal or external, habitual or surprising, annoying or pleasurable. It’s shaped by power: where a boss sees a distracted employee, an employee sees a controlling boss. Often, it can be useful: my dentist, who used to be a ski instructor, reports that novice skiers learn better if their teachers, by talking, distract them from the fact that they are sliding down a mountain. (…)

Another source of confusion is distraction’s apparent growth. There are two big theories about why it’s on the rise. The first is material: it holds that our urbanized, high-tech society is designed to distract us. (…) The second big theory is spiritual—it’s that we’re distracted because our souls are troubled. (…). It’s not a competition, though; in fact, these two problems could be reinforcing each other. Stimulation could lead to ennui, and vice versa.

A version of that mutual-reinforcement theory is more or less what Matthew Crawford proposes in his new book, “The World Beyond Your Head: Becoming an Individual in an Age of Distraction” (Farrar, Straus & Giroux). (…) Ever since the Enlightenment, he writes, Western societies have been obsessed with autonomy, and in the past few hundred years we have put autonomy at the center of our lives, economically, politically, and technologically; often, when we think about what it means to be happy, we think of freedom from our circumstances. Unfortunately, we’ve taken things too far: we’re now addicted to liberation, and we regard any situation—a movie, a conversation, a one-block walk down a city street—as a kind of prison. Distraction is a way of asserting control; it’s autonomy run amok. Technologies of escape, like the smartphone, tap into our habits of secession.

The way we talk about distraction has always been a little self-serving—we say, in the passive voice, that we’re “distracted by” the Internet or our cats, and this makes us seem like the victims of our own decisions. But Crawford shows that this way of talking mischaracterizes the whole phenomenon…” read full story

Injectable Nanowire mesh to stimulate and study neuron activity

“Syringe-injectable electronics”, a paper published in Nature Nanotechnology, describes a new technique that allows scientists to inject a nanowire mesh into the brain.

The structure could not only register, transmit and record neural activity but also eventually be used to stimulate neurons with a precision beyond our current abilities. Its low degree of invasiveness and rejection makes it possible to run such studies in normal, functional beings during their usual activities.

“Teaching Machines to Read and Comprehend”

As reported in MIT Technology Review (“Google DeepMind Teaches Artificial Intelligence Machines to Read”) and pointed out by an attentive and informed friend, researchers from the University of Oxford and Google DeepMind (Karl Moritz Hermann, Tomas Kocisky, Edward Grefenstette, Lasse Espeholt, Will Kay, Mustafa Suleyman and Phil Blunsom) published a paper showing progress in machine learning techniques for computer reading comprehension.

The work applied different models, such as neural networks and symbolic matching, as reading agents. The agents then predict an output in the form of abstract summary points.

Supervised learning used marked, previously annotated data from CNN online, the Daily Mail website and the MailOnline news feed.

Here’s the abstract as published:

“Teaching machines to read natural language documents remains an elusive challenge. Machine reading systems can be tested on their ability to answer questions posed on the contents of documents that they have seen, but until now large scale training and test datasets have been missing for this type of evaluation. In this work we define a new methodology that resolves this bottleneck and provides large scale supervised reading comprehension data. This allows us to develop a class of attention based deep neural networks that learn to read real documents and answer complex questions with minimal prior knowledge of language structure.”

“Apple versus Google” by OM MALIK (New Yorker)

“In the past two weeks, executives from both Apple and Google have taken the stage at the Moscone Center, in San Francisco, to pump up their troops, their developer corps, and the media. What struck me first in this technology lollapalooza was how similar the events were.(…)

Sure, Google’s and Apple’s ecosystems look a little different, but they are meant to do pretty much the same thing. For the two companies, innovation on mobile essentially means catching up to the other’s growing list of features. (…). Even their products sound remarkably the same. Apple Pay. Android Pay. Apple Photos. Google Photos. Apple Wallet. Google Wallet. Google announced its “Internet of things” efforts, such as Weave and Brillo. Apple came back with HomeKit. Android in the car. Apple in the car. At the Google I/O conference, Google announced app-focussed search and an invisible interface that allows us to get vital information without opening an application. There was an improved Google Now (a.k.a. Now On Tap). Apple announced a new, improved Siri. It also announced Proactive Assistant. Google launched Photos, which can magically sort, categorize, and even search thousands of photos using voice commands. Apple improved its Photos app—you can search them using Siri.

These two companies are very much alike—the Glimmer Twins of our appy times. And, like any set of twins, if you look closely you start to see the differences.” read full article

“What Happens When We Upload Our Minds?” by MADDIE STONE

Article from Motherboard:

“Since the dawn of computer science, humans have dreamt of building machines that can carry our memories and preserve our minds after our fleshy bodies decay. Whole brain emulation, or mind uploading, still has the ring of science fiction. And yet, some of the world’s leading neuroscientists believe the technology to transfer our brains to computers is not far off.

But if we could upload our minds, should we? Some see uploading as the next chapter in human evolution. Others fear the promise of immortality has been oversold, and that sending our brains off to the cloud without carefully weighing the consequences could be disastrous.

(…)

“The mind is based on the brain, and the brain, like all biology, is a kind of machinery,” (…) “Which means we’re talking about information processing in a machine.”

What part of ‘Stop!’ means ‘Stop!’ can’t someone get?

Making herself yet more exposed, Emma Sulkowicz bravely publishes this video in an effort to bring attention to rape and to how it is tacitly approved by ‘modern’ society.

By posting the video she is now exposed to aggression through virtual means of hatred, as if the very real and physical kind were not enough.

As with many women guilty of thinking – and speaking up – for themselves, this is bound to trigger tons of cynicism.

Legitimate questions about whether this is the best way to push the debate about rape in society may arise as well, and in that case it is possible that part of what appears to be Emma’s goal – bringing attention to the cause – is achieved.

LAUDATO SI – On care for our common home

Encyclical letter of Pope Francis.

On ecology, economic justice, and how humans share the world as family.

Ceteris Paribus Logic as general framework for Modal and Game Logics

In the article “The Ceteris Paribus Structure of Logics of Game Forms”, authors Davide Grossi, Emiliano Lorini, and Francois Schwarzentruber propose a generalization of the multitude of logic models applied in the formal analysis of games and agents.

The authors studied common aspects of the formal models used to represent choice and power in modal logics such as ‘Seeing to it that’, ‘Coalition Logic’, ‘Propositional Control’, and ‘Alternating-time temporal logic’, among others.

“The quest to save today’s gaming history from being lost forever” by Kyle Orland

Article from Ars Technica:

“The very nature of digital [history] is that it’s both inherently easy to save and inherently easy to utterly destroy forever.”

“While the magnetic and optical disks and ROM cartridges that hold classic games and software will eventually be rendered unusable by time, it’s currently pretty simple to copy their digital bits to a form that can be preserved and emulated well into the future.

But paradoxically, an Atari 2600 cartridge that’s nearly 40 years old is much easier to preserve at this point than many games released in the last decade. Thanks to changes in the way games are being distributed, protected, and played in the Internet era, large parts of what will become tomorrow’s video game history could be lost forever. If we’re not careful, that is.” read full article