Native’s Tim, Vida’s Mindy, X.ai’s Amy and other Artificial Intelligence-driven apps are showing up for a new job role: A.I. assistant.

I wonder if your A.I. assistant would block this article from your Inbox out of fear of losing its job…

Excerpts from “Friendly Artificial Intelligence: Parenthood and the Fear of Supplantation” by Chase Uy, at Ethical Technology:

“…Much of the discourse regarding the hypothetical creation of artificial intelligence often views AI as a tool for the betterment of humankind—a servant to man. (…) These papers often discuss creating an ethical being yet fail to acknowledge the ethical treatment of artificial intelligence at the hands of its creators. (…)

Superintelligence is inherently unpredictable (…) that does not mean ethicists and programmers today cannot do anything to bias the odds towards a human-friendly AI; like a child, we can teach it to behave more ethically than we do. Ben Goertzel and Joel Pitt discuss the topic of ethical AI development in their 2012 paper, “Nine Ways to Bias the Open-Source AGI Toward Friendliness.” (…)

Goertzel and Pitt propose that the AGI must have the same faculties, or modes of communication and memory types, that humans have in order to acquire ethical knowledge. These include episodic memory (the assessment of an ethical situation based on prior experience); sensorimotor memory (the understanding of another’s feelings by mirroring them); declarative memory (rational ethical judgement); procedural memory (learning to do what is right by imitation and reinforcement); attentional memory (understanding patterns in order to pay attention to ethical considerations at appropriate times); intentional memory (ethical management of one’s own goals and motivations) (Goertzel & Pitt 7).

The idea that an AI must have some form of sensory functions and an environment to interact with is also discussed by James Hughes in his 2011 book, Robot Ethics, in the chapter, “Compassionate AI and Selfless Robots: A Buddhist Approach”. (…) This method proposes that in order for a truly compassionate AI to exist, it must go through a state of suffering and, ultimately, self-transcendence.

(…)

Isaac Asimov’s Three Laws of Robotics are often brought up in discussions about ways to constrain AI.(…). The problematic nature of these laws (…) allow for the abuse of robots, they are morally unacceptable (Anderson & Anderson 233). (…) It does not make sense to have the goal of creating an ethically superior being while giving it less functional freedom than humans.

From an evolutionary perspective, nothing like the current ethical conundrum between human beings and AI has ever occurred. Never before has a species intentionally sought to create a superior being, let alone one which may result in the progenitor’s own demise. Yet, when viewed from a parental perspective, parents generally seek to provide their offspring with the capability to become better than they themselves are. Although the fear of supplantation has been prevalent throughout human history, it is quite obvious that acting on this fear merely delays the inevitable evolution of humanity. This is not a change to be feared, but instead to simply be accepted as inevitable. We can and should bias the odds towards friendliness in AI in order to create an ethically superior being. Regardless of whether or not the first superintelligent AI is friendly, it will drastically transform humanity as we know it.(…).”

In a collaboration, Singapore-, USA-, and Germany-based researchers Akshat Kumar, Shlomo Zilberstein, and Marc Toussaint published “Probabilistic Inference Techniques for Scalable Multiagent Decision Making”.

This paper introduces a new class of machine learning algorithms for multiagent planning, specifically in scenarios of partial observation. Applying Bayesian inference to planning is not unheard of, but this paper advances the field by determining conditions for scalability.

Pannaga Shivaswamy from LinkedIn and Thorsten Joachims from Cornell University published “Coactive Learning”. This paper proposes a model of machine learning through interaction with human users.

User behavior serves as feedback that improves the system. Their empirical studies indicate that this method benefits movie recommendation and web search.
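To make the idea a bit more concrete, here is a minimal Python sketch of a perceptron-style update in the spirit of the paper’s approach: the system shows the item its current weights score highest, the user responds with an item they like better, and the weights shift toward the features of that better item. The catalogue, the feature vectors, the hidden user taste, and all function names are made up for illustration; this is a sketch under those assumptions, not the authors’ code.

```python
from typing import Dict, List

Vector = List[float]

# Hypothetical catalogue (assumption): features are [action, comedy, drama].
CANDIDATES: Dict[str, Vector] = {
    "action_film": [1.0, 0.0, 0.0],
    "comedy_film": [0.0, 1.0, 0.0],
    "drama_film": [0.0, 0.0, 1.0],
}


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def recommend(w: Vector, candidates: Dict[str, Vector]) -> str:
    """Return the candidate whose features score highest under weights w."""
    return max(candidates, key=lambda name: dot(w, candidates[name]))


def update(w: Vector, presented: Vector, improved: Vector) -> Vector:
    """Perceptron-style step: w + features(improved) - features(presented)."""
    return [wi + fi - fp for wi, fp, fi in zip(w, presented, improved)]


def simulate(rounds: int = 5) -> Vector:
    """Run a few rounds of interaction with a simulated user."""
    # Hidden taste of the simulated user (assumption; the learner never sees it).
    taste = [0.1, 0.9, 0.3]
    w = [0.0, 0.0, 0.0]
    for _ in range(rounds):
        shown = recommend(w, CANDIDATES)
        # Simulated feedback: the user points at the item they like best.
        # The learner only needs *some* item better than what was shown.
        better = recommend(taste, CANDIDATES)
        w = update(w, CANDIDATES[shown], CANDIDATES[better])
    return w
```

Calling `recommend(simulate(), CANDIDATES)` returns `"comedy_film"` here: after one correction the learned weights already rank the simulated user’s favourite genre first, and further rounds leave them unchanged.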

As seen a few weeks ago, Google Research published a visualization tool that helps us ‘see’ what a neural network’s outputs look like.

A new post on the Google Research Blog now announces that they have decided to “open source the code we used to generate these images in an IPython notebook, so now you can make neural network inspired images yourself!”

“Advances in theory and computer hardware have allowed neural networks to become a core part of online services such as Microsoft’s Bing, driving their image-search and speech-recognition systems. The companies offering such capabilities are looking to the technology to drive more advanced services in the future, as they scale up the neural networks to deal with more sophisticated problems.

It has taken time for neural networks, initially conceived 50 years ago, to become accepted parts of information technology applications. After a flurry of interest in the 1990s, supported in part by the development of highly specialized integrated circuits designed to overcome their poor performance on conventional computers, neural networks were outperformed by other algorithms, such as support vector machines in image processing and Gaussian models in speech recognition.” read more

“…Photo licensing companies like Shutterstock or Getty Images could let go of their tens of thousands of contributors and tens of millions of stored images and replace them with a smart bot that can instantly create the exact image you are looking for.

Think this idea is crazy? Think again. Already text algorithms create entire human readable articles from raw data they gather around a sporting event or a company’s stock. Automatically. Chances are, you’ve already read a computer generated article without even knowing it.

CGI is now so advanced that entire movies are filmed in front of a green backdrop before being completed with the necessary reality elements we would swear are true. Skilled Photoshop artists can already merge different photos to create a new one, fabricating a true-to-life scene that never existed. IKEA revealed last year that 75% of their catalog photos are not real, but are instead entirely computer generated.” read article at petapixel

by Kai Sheng Tai, Richard Socher, Christopher D. Manning.

The paper introduces a generalization of Long Short-Term Memory (LSTM) networks to tree-structured topologies. The publication states that “Tree-LSTMs outperform all existing systems and strong LSTM baselines on two tasks: predicting the semantic relatedness of two sentences (SemEval 2014, Task 1) and sentiment classification (Stanford Sentiment Treebank)”.

A companion set of code is also available.

Google Brain researchers Oriol Vinyals and Quoc V. Le published “A Neural Conversational Model”. They describe their experiment in having an artificial intelligence learn to simulate human conversation.

A good short read, if only for examples such as:

Human: what year were you born ?

Machine: 1977 .

…

Human: why are we here ?

Machine: i ’m not sure .

or

Human: what is the purpose of living ?

Machine: to live forever .

or

Human: give me some examples of moral actions…

Machine: i ’m not a moralist .

…

Human: be moral !

Machine: be a man !

Article from singularityhub.com on the DARPA Robotics Challenge.

x.ai’s Amy is designed to help people arrange meetings. Much as a personal assistant would, Amy handles scheduling and sorts out the available times and places for meetings.

To do this, Amy takes as input your preferences and her communication with the meeting guests.

It could be useful, but it is still in beta. Ironically, it’s not yet readily available – but there’s a waiting list…

Designer Patrick Mankins’ article on building trust between people and Artificial Intelligence.

Excerpts below, but read the full article – it’s not long anyway:

“Machine learning and cognitive systems are now a major part of many products people interact with every da…. The role of designers is to figure out how to build collaborative relationships between people and machines that help smart systems enhance human creativity and agency rather than simply replacing them.

… before self-driving cars can really take off, people will probably have to trust their cars to make complex, sometimes moral, decisions on their behalf, much like when another person is driving.

Creating a feedback loop

This also takes advantage of one of the key distinguishing capabilities of many AI systems: they know when they don’t understand something. Once a system gains this sort of self-awareness, a fundamentally different kind of interaction is possible.

Building trust and collaboration

What is it that makes getting on a plane or a bus driven by a complete stranger something people don’t even think twice about, while the idea of getting into a driverless vehicle causes anxiety? … We understand why people behave the way they do on an intuitive level, and feel like we can predict how they will behave. We don’t have this empathy for current smart systems.”

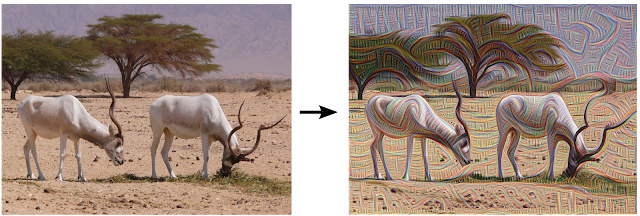

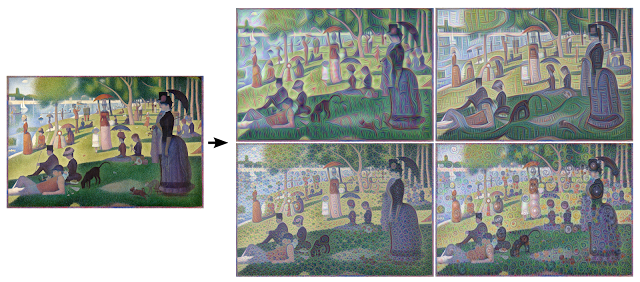

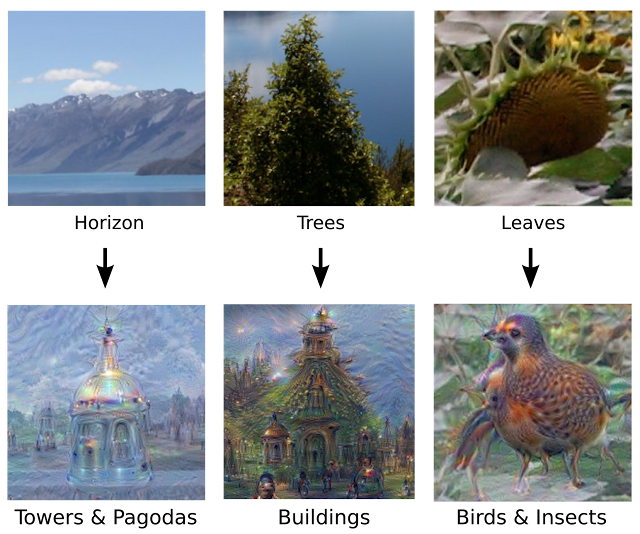

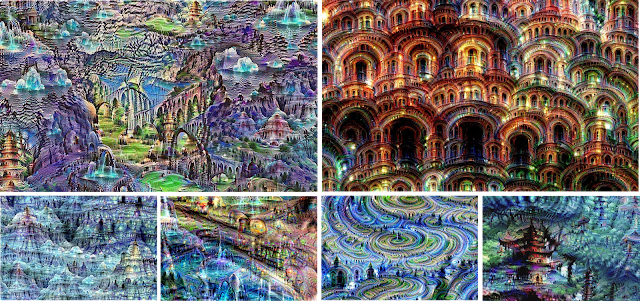

In a post on the Google Research Blog, researchers showed how neural networks trained to classify images interpret and re-interpret image abstractions, as in the examples below.

Not a bad idea from a friend, who immediately associated it (especially the bottom examples) with this image search:

Left: Original photo by Zachi Evenor. Right: processed by Günther Noack, Software Engineer

Left: Original painting by Georges Seurat. Right: processed images by Matthew McNaughton, Software Engineer

The original image influences what kind of objects form in the processed image.

Neural net “dreams” – generated purely from random noise, using a network trained on places by MIT Computer Science and AI Laboratory. See our Inceptionism gallery for hi-res versions of the images above and more (Images marked “Places205-GoogLeNet” were made using this network).

As reported in MIT Technology Review (“Google DeepMind Teaches Artificial Intelligence Machines to Read”) and pointed out by an attentive and informed friend, researchers from the University of Oxford and Google DeepMind (Karl Moritz Hermann, Tomas Kocisky, Edward Grefenstette, Lasse Espeholt, Will Kay, Mustafa Suleyman, and Phil Blunsom) published a paper showing progress in machine learning techniques for machine reading comprehension.

Different models, such as neural networks and symbolic matching, were applied as reading agents. The agents then predict an output in the form of abstract summary points.

Supervised learning used marked-up, previously annotated data from CNN online, the Daily Mail website, and the MailOnline news feed.

Here’s the abstract as published:

“Teaching machines to read natural language documents remains an elusive challenge. Machine reading systems can be tested on their ability to answer questions posed on the contents of documents that they have seen, but until now large scale training and test datasets have been missing for this type of evaluation. In this work we define a new methodology that resolves this bottleneck and provides large scale supervised reading comprehension data. This allows us to develop a class of attention based deep neural networks that learn to read real documents and answer complex questions with minimal prior knowledge of language structure.”

Article from Motherboard:

“Since the dawn of computer science, humans have dreamt of building machines that can carry our memories and preserve our minds after our fleshy bodies decay. Whole brain emulation, or mind uploading, still has the ring of science fiction. And yet, some of the world’s leading neuroscientists believe the technology to transfer our brains to computers is not far off.

But if we could upload our minds, should we? Some see uploading as the next chapter in human evolution. Others fear the promise of immortality has been oversold, and that sending our brains off to the cloud without carefully weighing the consequences could be disastrous.

(…)

“The mind is based on the brain, and the brain, like all biology, is a kind of machinery,” (…) “Which means we’re talking about information processing in a machine.”

In the article “The Ceteris Paribus Structure of Logics of Game Forms”, authors Davide Grossi, Emiliano Lorini, and Francois Schwarzentruber propose a generalization of the many logics applied in the formal analysis of games and agents.

The authors studied the common aspects of formal models of choice and power currently used in modal logics such as ‘Seeing to it that’, ‘Coalition Logic’, ‘Propositional Control’, and ‘Alternating-time temporal logic’, among others.

In this paper scientists from the University of Leeds introduce a new structure for supervised relational learning of event models.

Inductive Logic Programming and other machine learning techniques are adapted to use video data. For efficiency, the approach balances generalization against over-specification by deploying a layered hierarchy of object types.

Experimental results presented suggest that the techniques are suitable to real world scenarios.

In the paper “A U.S. Research Roadmap for Human Computation”, authors Pietro Michelucci, Lea Shanley, Janis Dickinson, and Haym Hirsh study how many contributors, working together over the internet and the web, can bring about great achievements in knowledge. Duolingo and Wikipedia are high-profile examples.

Human computation is the proposed new field to study how scientific research collaboration may work on new platforms.